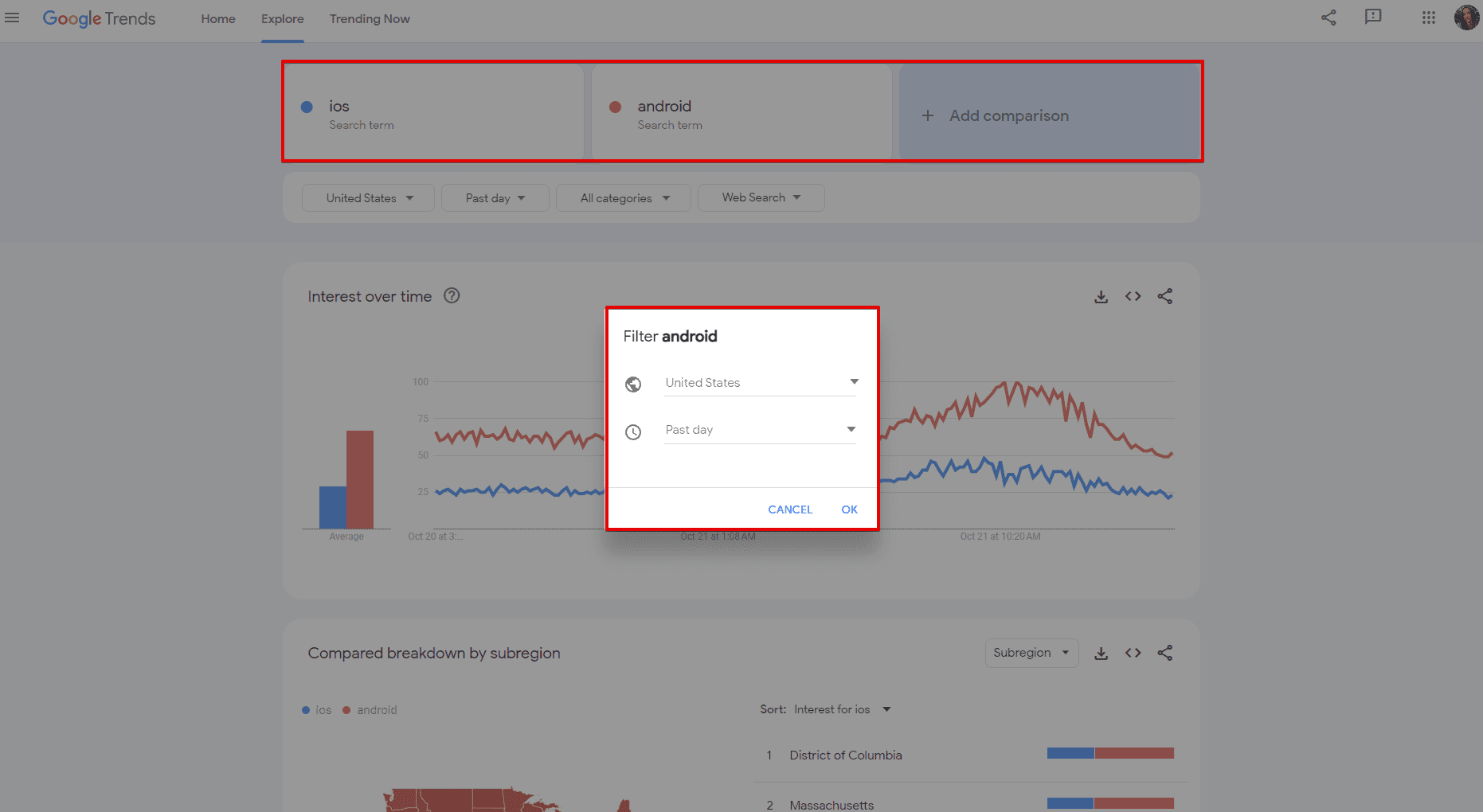

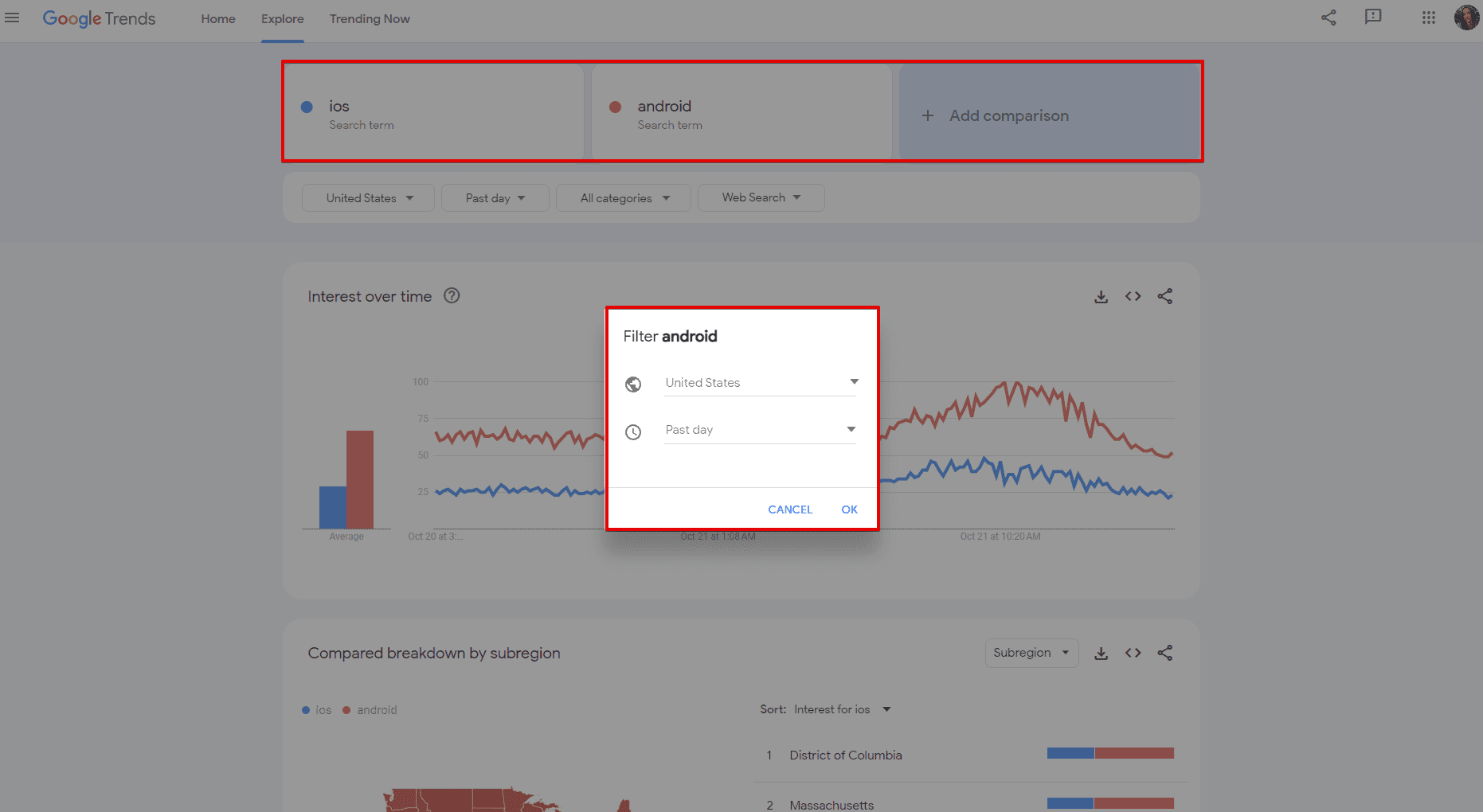

Google Trends, a vital tool for marketers, journalists, and researchers worldwide, recently suffered a frustrating disruption when its four-hour filter stalled unexpectedly. The glitch prevented users from accessing the most recent trending data, leaving many who depend on real-time insights in the dark. The interruption sparked widespread concern among professionals who rely on timely information to track emerging topics, analyze consumer behavior, and make data-driven decisions. Google’s support channels received an influx of complaints as users sought clarity and a quick resolution.

The four-hour filter on Google Trends is crucial for capturing short-term spikes in search interest, providing valuable snapshots of evolving public attention. Its malfunction not only blocked access to fresh data but also raised questions about the reliability and robustness of Google’s analytics infrastructure. Industry experts noted that such outages, even brief ones, can disrupt time-sensitive campaigns and news reporting. The lack of immediate communication from Google added to the uncertainty, prompting calls for greater transparency and better contingency measures in its data services.

Users across various sectors voiced their frustration on social media and professional forums, describing how the glitch hampered their ability to monitor breaking trends and consumer sentiment. Some digital marketers reported setbacks in campaign optimization, while journalists struggled to verify trending news topics in real time. The incident underscored the growing dependence on digital tools for instantaneous data and the cascading effects when such systems falter. Meanwhile, Google engineers worked behind the scenes to identify and fix the root cause and assured users of a swift recovery.

The recent disruption in Google Trends’ four-hour filter has prompted many organizations to reevaluate their dependence on a single data platform. In industries like marketing and journalism, where timing is crucial, even short lapses can mean lost opportunities and a diminished competitive edge. As a result, organizations are placing more emphasis on resilient data strategies that draw on multiple sources and fallback options, so that critical insights stay available during service interruptions.

Moreover, the incident sheds light on the broader challenge tech companies face in balancing rapid innovation with operational stability. As user expectations for real-time analytics grow, the pressure to deliver flawless performance intensifies. Maintaining this balance requires ongoing investments in infrastructure, sophisticated monitoring tools, and robust incident response protocols. Google’s experience with the four-hour filter glitch serves as a case study in the complexities of scaling services to meet global demand without sacrificing reliability.

The outage has also catalyzed discussions about digital literacy and user preparedness. While technology providers must strive for seamless service, users also benefit from understanding system limitations and developing contingency plans. Educational initiatives that raise awareness of potential disruptions and alternative data methods can help users navigate outages more effectively, reducing frustration and keeping their work on track. This shared responsibility between providers and users is essential for sustaining trust in an increasingly digital world.

The Importance of Real-Time Data in a Rapidly Changing Digital Landscape

In today’s fast-paced digital environment, real-time data has become indispensable for informed decision-making. Tools like Google Trends allow businesses and media professionals to respond promptly to shifts in public interest and market dynamics. The disruption of the four-hour filter exposed vulnerabilities in relying heavily on single platforms for critical insights. Analysts emphasize the need for diversified data sources and backup strategies to mitigate risks associated with such outages.

The incident has also reignited discussions about the transparency and accountability of major tech companies that manage global data services. Users expect prompt updates and clear communication when disruptions occur so they can adapt their strategies. The episode also highlights the importance of continuous infrastructure investment and system redundancy to prevent future failures. As reliance on real-time analytics grows, so does the imperative for providers like Google to uphold stringent reliability standards.

The outage of Google Trends’ four-hour filter revealed how heavily many users depend on real-time data analytics. For marketers and journalists, the ability to monitor emerging topics within narrow timeframes is essential for timely decision-making. Interruptions in such services can have cascading effects, delaying campaign launches, news reporting, and strategic pivots. The incident has prompted many professionals to reassess their reliance on a single platform and to seek alternative or supplementary data sources.

Data reliability and uptime are foundational for any digital analytics service, especially one serving millions of users globally. Google’s extensive infrastructure usually ensures high availability, but even minor glitches can cause significant disruptions. Users now expect not only service continuity but also proactive communication from providers during incidents. Slow updates during the four-hour filter outage fueled frustration and uncertainty, underscoring the need for better transparency.

Social media amplified user complaints and experiences during the outage, highlighting the wide-ranging impact across industries. Influencers, content creators, and analysts shared their challenges, creating a collective awareness of the glitch’s consequences. This public discourse pressured Google to respond more swiftly and communicate progress, illustrating how community feedback can drive improvements in service management.

The incident also raised questions about Google’s internal monitoring and alert systems. Experts speculate that better anomaly detection and automated fail-safes could reduce downtime and speed up issue resolution. Continued investment in such technologies is vital as data volumes and user expectations keep growing. Google’s commitment to reviewing its protocols suggests that it recognizes these challenges and is willing to adapt.
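
One basic building block of such automated monitoring is a freshness check that raises an alarm when a feed stops producing new data points, which is roughly how a stalled "past four hours" view would look from the outside. The Python sketch below is hypothetical: the 30-minute threshold and the `is_stale` and `check_feed` helpers are illustrative assumptions, not a description of Google’s internal systems.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical threshold: the newest data point may be at most this old
# before the feed is treated as stalled. A real service would tune it per feed.
MAX_LAG = timedelta(minutes=30)


def is_stale(latest_point: datetime, now: datetime, max_lag: timedelta = MAX_LAG) -> bool:
    """Return True if the newest data point lags `now` by more than `max_lag`."""
    return now - latest_point > max_lag


def check_feed(timestamps: list, now: datetime, max_lag: timedelta = MAX_LAG) -> str:
    """Classify a feed as 'empty', 'stale' or 'ok' from its data-point timestamps."""
    if not timestamps:
        return "empty"
    return "stale" if is_stale(max(timestamps), now, max_lag) else "ok"


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    # Hourly points whose newest entry is already an hour old: the feed has stalled.
    stalled = [now - timedelta(hours=h) for h in (4, 3, 2, 1)]
    print(check_feed(stalled, now))  # stale
    print(check_feed([now - timedelta(minutes=5)], now))  # ok
```

Comparing against the newest timestamp, rather than waiting for request errors, catches the case where a service stays up but silently stops updating.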

For users, the outage serves as a reminder to implement contingency plans for critical data needs. This might include subscribing to multiple analytics platforms, utilizing APIs from different providers, or maintaining historical trend databases to bridge gaps during outages. Diversification of data sources can mitigate risks and enhance resilience against future disruptions.
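
The fallback idea can be sketched as an ordered chain of data sources, with a local cache of the last good result as the final resort. The providers below are hypothetical stand-in functions, not real Google Trends or third-party API calls, and the `TrendFetcher` class is an illustrative name; the sketch only shows the control flow.

```python
from typing import Callable

# A provider is any callable that returns trend data for a query and raises
# on failure. The providers used below are hypothetical stubs, not real APIs.
Provider = Callable[[str], dict]


class TrendFetcher:
    """Try each provider in order; fall back to the last good result per query."""

    def __init__(self, providers: list) -> None:
        self.providers = providers  # list of (name, Provider) pairs, in priority order
        self.cache = {}  # last successful result for each query

    def fetch(self, query: str) -> tuple:
        """Return (source_name, data); source_name is 'cache' for cached data."""
        for name, provider in self.providers:
            try:
                data = provider(query)
            except Exception:
                continue  # this source is down or erroring; try the next one
            self.cache[query] = data
            return name, data
        if query in self.cache:
            return "cache", self.cache[query]
        raise RuntimeError(f"no source or cached data available for {query!r}")


if __name__ == "__main__":
    def primary(query: str) -> dict:
        raise ConnectionError("four-hour view not updating")  # simulated outage

    def secondary(query: str) -> dict:
        return {"query": query, "interest": 72}  # made-up stub value

    fetcher = TrendFetcher([("primary", primary), ("secondary", secondary)])
    print(fetcher.fetch("heatwave"))  # ('secondary', {'query': 'heatwave', 'interest': 72})
```

Returning the source name alongside the data lets a dashboard flag cached results as possibly out of date instead of presenting them as live.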

The reliance on Google Trends reflects broader trends in digital transformation where instant access to information shapes business and media strategies. As such, the expectations for flawless service delivery are higher than ever. Failures, even brief, can damage reputations and impact revenue, motivating providers to adopt stringent quality assurance and disaster recovery practices.

Moving Forward: Enhancing Resilience and User Trust in Digital Analytics

Following the restoration of the four-hour filter, Google has committed to reviewing its monitoring protocols and improving its incident response framework. Proposals include enhanced user alerts during service interruptions and more detailed status dashboards to keep users informed. Industry stakeholders advocate for collaborative efforts between tech companies and users to co-create resilient platforms capable of withstanding growing demands.

As digital ecosystems evolve, the balance between innovation and reliability becomes increasingly critical. Users of tools like Google Trends seek assurance that their data needs will be met consistently without unexpected disruptions. This incident serves as a timely reminder for all involved to prioritize robust infrastructure, transparent communication, and proactive risk management to maintain trust in essential digital services.

Educational efforts aimed at informing users about potential system limitations and best practices during outages can also improve experiences. Clear guidelines on how to adapt when real-time data is unavailable empower users to manage risks proactively. Such transparency fosters trust and positions providers as partners rather than mere vendors.

Google’s handling of the outage will likely influence user confidence and brand perception. Swift restoration combined with meaningful improvements in communication and system robustness can turn a negative event into an opportunity for strengthening relationships. Conversely, inadequate responses risk alienating key user groups who rely heavily on these analytics tools.

The event also spotlights the importance of collaborative ecosystems in digital analytics, where providers, users, and third-party developers work together to create more resilient solutions. Open dialogues and shared feedback loops enable continuous innovation and responsiveness to evolving user needs. Building such communities can enhance overall service quality and user satisfaction.

Looking ahead, the growing demand for real-time data analytics will push technology companies to innovate beyond current capabilities. Artificial intelligence, machine learning, and predictive analytics may play larger roles in preempting issues and optimizing service delivery. Google’s recent challenges with the four-hour filter may accelerate investments in these areas, shaping the future landscape of digital trend analysis.

The outage has also highlighted the critical role of user feedback in improving digital services. The stream of complaints reaching Google’s support channels during the incident showed how active communication channels can help companies quickly identify and address problems. Encouraging users to report issues and share experiences creates a valuable feedback loop that drives continuous, customer-centric improvement.

Many businesses have begun exploring hybrid analytics models that combine Google Trends data with insights from social media platforms, news aggregators, and proprietary databases. This integrated approach not only diversifies information sources but also enhances the accuracy and depth of trend analysis. The recent glitch has accelerated interest in such comprehensive strategies to reduce vulnerability from single-point failures.
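
One simple way to combine such sources is to rescale each series to a common 0-100 range relative to its own peak (the convention Google Trends uses for its interest values) and take a weighted average, skipping any source that is currently unavailable. The source names, weights, readings and the `rescale` and `blend` helpers below are illustrative assumptions, not a recommended model.

```python
from typing import Optional


def rescale(values: list) -> list:
    """Scale a series to 0-100 relative to its own peak."""
    peak = max(values)
    if peak <= 0:
        return [0.0 for _ in values]
    return [100.0 * v / peak for v in values]


def blend(series: dict, weights: dict) -> list:
    """Weighted average of rescaled series, ignoring sources that returned no data.

    `series` maps source name -> Optional[list of floats]; None or [] means the
    source is unavailable. Weights of the remaining sources are renormalized so
    the result stays on a 0-100 scale.
    """
    available = {name: rescale(vals) for name, vals in series.items() if vals}
    if not available:
        raise ValueError("no data sources available")
    lengths = {len(vals) for vals in available.values()}
    if len(lengths) != 1:
        raise ValueError("series must cover the same time buckets")
    n = lengths.pop()
    total = sum(weights[name] for name in available)
    return [
        sum(weights[name] * vals[i] for name, vals in available.items()) / total
        for i in range(n)
    ]


if __name__ == "__main__":
    # Hypothetical hourly readings; "news" is down, so only two sources are blended.
    readings: dict = {
        "trends": [20.0, 60.0, 100.0],    # already on a 0-100 scale
        "social": [150.0, 300.0, 600.0],  # raw mention counts
        "news": None,
    }
    weights = {"trends": 0.5, "social": 0.3, "news": 0.2}
    print(blend(readings, weights))
```

Renormalizing the weights means an outage in one source lowers the blend’s coverage but does not drag the combined score toward zero.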

The four-hour filter glitch also raised awareness about the complexity behind real-time data aggregation and processing. Behind the scenes, vast amounts of search data must be collected, filtered, and presented almost instantly, requiring sophisticated infrastructure and algorithms. Understanding these technical challenges can foster greater patience and realistic expectations among users, especially during rare service interruptions.
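
As a toy illustration of what "presented almost instantly" involves, the sketch below keeps a running count of search terms seen in the most recent four hours, evicting old events as new ones arrive. It is a single-process Python example that assumes events arrive in timestamp order; the `SlidingWindowCounter` name is hypothetical, the source does not describe Google’s actual pipeline, and a production system at Google’s scale would look very different.

```python
from collections import Counter, deque
from datetime import datetime, timedelta
from typing import Optional

# Mirrors the "past four hours" view discussed above; illustrative only.
WINDOW = timedelta(hours=4)


class SlidingWindowCounter:
    """Count search terms whose events fall within the most recent WINDOW."""

    def __init__(self, window: timedelta = WINDOW) -> None:
        self.window = window
        self.events: deque = deque()  # (timestamp, term) pairs, oldest first
        self.counts: Counter = Counter()

    def add(self, ts: datetime, term: str) -> None:
        """Record one search event; assumes events arrive in timestamp order."""
        self.events.append((ts, term))
        self.counts[term] += 1
        self._evict(ts)

    def _evict(self, now: datetime) -> None:
        # Drop events that are WINDOW or more older than `now`.
        while self.events and now - self.events[0][0] >= self.window:
            _, old_term = self.events.popleft()
            self.counts[old_term] -= 1
            if self.counts[old_term] == 0:
                del self.counts[old_term]

    def top(self, n: int = 3, now: Optional[datetime] = None) -> list:
        """Return the n most frequent terms, optionally evicting relative to `now`."""
        if now is not None:
            self._evict(now)
        return self.counts.most_common(n)


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 8, 0)
    counter = SlidingWindowCounter()
    counter.add(t0, "storm")
    counter.add(t0 + timedelta(hours=1), "election")
    counter.add(t0 + timedelta(hours=2), "election")
    counter.add(t0 + timedelta(hours=4, minutes=30), "storm")  # the 08:00 event expires
    print(counter.top())  # [('election', 2), ('storm', 1)]
```

Each event is appended once and evicted once, so keeping the counts current costs amortized constant time per event; only the final ranking step depends on how many distinct terms are in the window.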

Google’s commitment to bolstering transparency with enhanced status dashboards and timely alerts reflects a broader industry trend toward openness. Providing real-time updates during outages empowers users to plan accordingly, reducing frustration and speculation. Such practices are becoming standard expectations for tech companies delivering critical online services.

Finally, the incident underscores the evolving nature of digital trust, where reliability and communication are as important as innovation. Users increasingly evaluate service providers not just on features but on how well they manage disruptions and engage with their community. Google’s handling of the four-hour filter issue will influence its reputation and user loyalty in a competitive analytics landscape.
